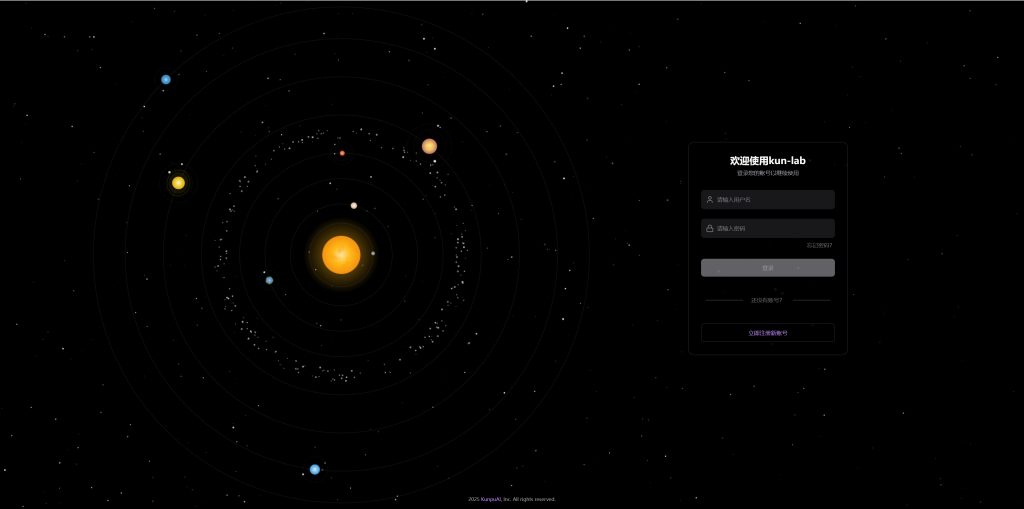

Key Highlights

Chat offline with top-tier models

Guaranteed data privacy

Easily manage and choose from the variety of models supported by Ollama

Multimodal model support

Deep search support

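```python
# Define the request models
from typing import List, Optional

from pydantic import BaseModel


class TavilySettings(BaseModel):
    """Configuration for the Tavily web search tool."""

    api_key: Optional[str] = None
    search_depth: Optional[str] = "basic"
    include_domains: Optional[List[str]] = None  # only return results from these domains
    exclude_domains: Optional[List[str]] = None  # drop results from these domains


class SearchRequest:
    """A single search query with its depth and result limit."""

    def __init__(self, query: str, search_depth: str = "basic", max_results: int = 5):
        self.query = query
        self.search_depth = search_depth
        self.max_results = max_results
```

Key Benefits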

Main Features

Intelligent document chat

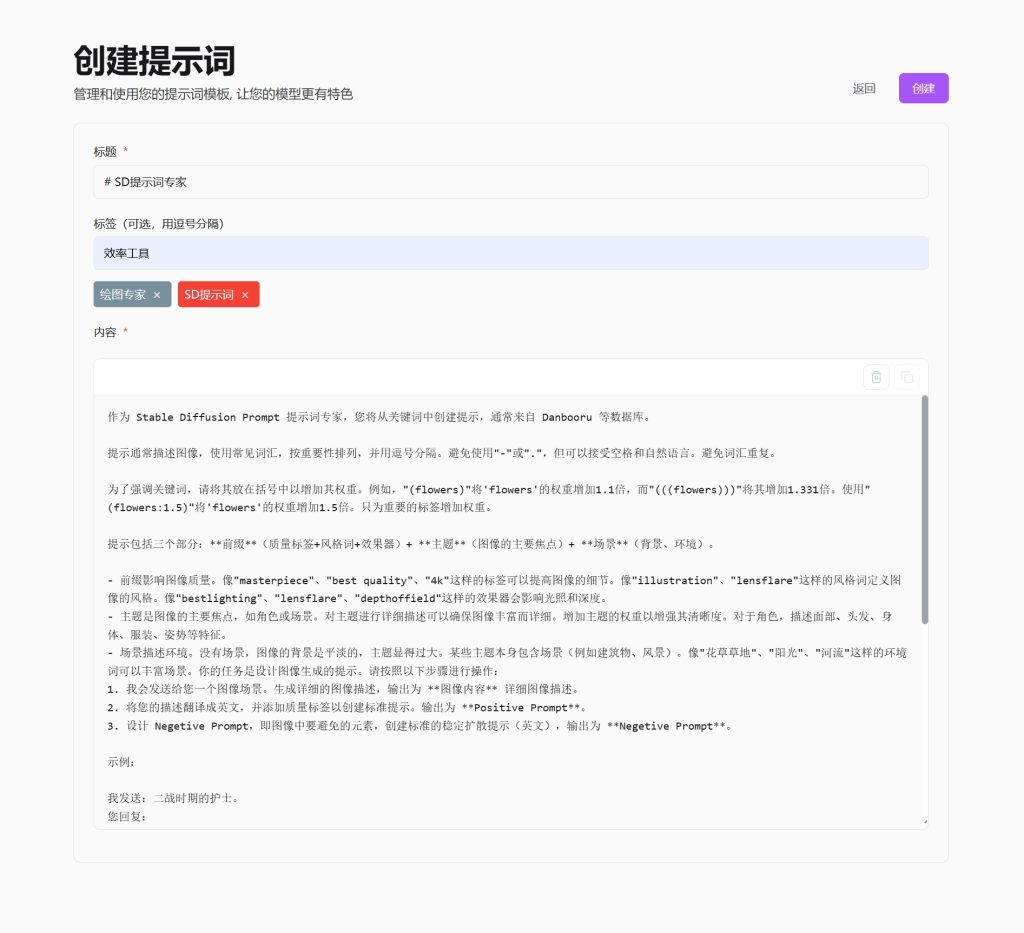

Flexible prompt management

Immersive design

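Hi, good morning! Another energetic day has begun! Anything fun to share?

Hey, Zack! Good morning to you! Full of energy? Careful, don't blow yourself up! 😂

Ha, you're funny. I'd like to watch some of the American TV shows that are popular right now.

American TV, nice choice! There are so many hit shows right now, Zack! Are you after a mind-bending thriller, something packed with flashy special effects, or something that will make you laugh until your sides hurt?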

Automatic code rendering

[Example rendered output: a summary card showing Income $654,321, Expenses $123,456, Profit +$530,865 (Total: $530,865), and Average +$247 (33%).]

FAQs

Answers to some of the most common questions. Can't find what you're looking for? Contact us.

Q1: Does kun-lab require an internet connection?

A: No. kun-lab is a locally deployed AI application that runs completely offline once your models are downloaded. However, some features, such as the web search tool, need an internet connection to work.

Q2: My computer's specs aren't very high. Can I still run kun-lab?

A: Yes. kun-lab supports models of various sizes, so you can choose one that fits your hardware. For lower-spec machines, we recommend models with fewer parameters (such as llama3:8b). The minimum recommended configuration is 8 GB of RAM and a CPU that supports the AVX2 instruction set.
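To check a machine against the minimum configuration above (8 GB of RAM and AVX2), a minimal sketch follows. It assumes Linux, where CPU feature flags are listed in `/proc/cpuinfo`; `cpu_has_avx2`, `total_ram_gib`, and `meets_minimum` are hypothetical helper names, not part of kun-lab.

```python
import os
import platform

MIN_RAM_GIB = 8  # minimum recommended RAM from the answer above


def cpu_has_avx2(cpuinfo_text: str) -> bool:
    """Return True if the first 'flags' line of /proc/cpuinfo lists avx2."""
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags"):
            _, _, values = line.partition(":")
            # Split on whitespace so a bare "avx" flag is not mistaken for "avx2".
            return "avx2" in values.split()
    return False


def total_ram_gib() -> float:
    """Total physical memory in GiB (Linux: page size times number of pages)."""
    return os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES") / 1024**3


def meets_minimum(ram_gib: float, has_avx2: bool) -> bool:
    """True when both the RAM and the AVX2 requirement are satisfied."""
    return ram_gib >= MIN_RAM_GIB and has_avx2


if __name__ == "__main__":
    if platform.system() == "Linux":
        with open("/proc/cpuinfo", encoding="utf-8") as f:
            avx2 = cpu_has_avx2(f.read())
        ram = total_ram_gib()
        print(f"RAM: {ram:.1f} GiB, AVX2: {avx2}, meets minimum: {meets_minimum(ram, avx2)}")
    else:
        print("This sketch reads /proc/cpuinfo, which only exists on Linux.")
```

The kernel reserves some memory for itself, so a machine with exactly 8 GB installed may report slightly less than 8 GiB; treat a borderline result accordingly.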

Q3: How can I improve the quality of AI responses?

A:
1. Use models with more parameters.
2. Adjust parameters on the model details page (for example, lower the temperature for more deterministic answers).
3. Ask clearer, more specific questions.
4. For complex problems, use a multi-turn conversation to guide the AI step by step.
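kun-lab exposes temperature on the model details page, and the same setting exists one layer down in Ollama. As a minimal sketch, assuming a local Ollama server on its default port 11434 and an already pulled `llama3:8b`, a request with a lowered temperature can be sent straight to Ollama's `/api/generate` endpoint. This bypasses kun-lab entirely and is not how kun-lab itself is implemented; `build_payload` and `generate` are illustrative names.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local endpoint


def build_payload(model: str, prompt: str, temperature: float) -> dict:
    """Build a non-streaming /api/generate request body with a custom temperature."""
    return {
        "model": model,
        "prompt": prompt,
        "stream": False,  # return a single JSON object instead of a stream of chunks
        "options": {"temperature": temperature},  # lower values give more deterministic output
    }


def generate(model: str, prompt: str, temperature: float = 0.2) -> str:
    """Send the request and return the generated text."""
    data = json.dumps(build_payload(model, prompt, temperature)).encode("utf-8")
    req = urllib.request.Request(
        OLLAMA_URL, data=data, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req, timeout=120) as resp:
        return json.loads(resp.read())["response"]


if __name__ == "__main__":
    try:
        print(generate("llama3:8b", "Explain AVX2 in one sentence.", temperature=0.1))
    except OSError as exc:  # covers connection errors, HTTP errors, and timeouts
        print(f"Could not get a response from Ollama at {OLLAMA_URL}: {exc}")
```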

Q4: Where is my conversation history stored? Is it secure?

A: All conversation history is stored only on your local device and is never uploaded to any server. The data is kept in the application's local database, which keeps it private and secure.

Q5: Why does the AI sometimes generate incorrect information?

A: Large language models generate responses probabilistically, so they can occasionally produce inaccurate or outdated information. For questions that need up-to-date information or high accuracy, we recommend enabling the deep search tool or cross-checking the information the AI provides.

Q6: How do I add custom models?

A: kun-lab currently supports adding models from Ollama's model library. You can browse the available models under the "Pull Model" option on the "Model Management" page. To use a custom model, first add it to Ollama, then set it up on kun-lab's "Custom Model" page.
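Adding a model to Ollama is typically done with a Modelfile and the `ollama create` command. The sketch below renders a minimal Modelfile using the `FROM`, `PARAMETER`, and `SYSTEM` instructions and prints the command that registers it. The model name `my-assistant`, the base `llama3:8b`, and the helper `build_modelfile` are illustrative assumptions; for a local GGUF file, pass its absolute path as the base instead.

```python
from typing import Optional


def build_modelfile(base: str, temperature: Optional[float] = None,
                    system: Optional[str] = None) -> str:
    """Render a minimal Ollama Modelfile.

    `base` is either an existing Ollama model name or an absolute path to a GGUF file.
    """
    lines = [f"FROM {base}"]
    if temperature is not None:
        lines.append(f"PARAMETER temperature {temperature}")
    if system:
        lines.append(f'SYSTEM """{system}"""')
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    text = build_modelfile("llama3:8b", temperature=0.3,
                           system="You are a concise assistant.")
    print(text)
    print("Save the text above as 'Modelfile', then run:")
    print("  ollama create my-assistant -f Modelfile")
```

Once `ollama create` succeeds, the new model is available to Ollama like any pulled model and can then be configured in kun-lab.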

Q7: What languages does kun-lab support?

A: The kun-lab interface currently supports Chinese and English. The AI models themselves can understand and generate many languages, depending on the model you use.

Q8: Can I run multiple different models at the same time?

A: Yes. kun-lab lets you run multiple models and switch between them freely during a conversation. Keep in mind, though, that loading several large models at once can consume significant system resources.
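To see how much memory the currently loaded models are using, you can ask Ollama directly. A minimal sketch, assuming a local Ollama server on the default port and that its `/api/ps` endpoint returns a `models` list whose entries carry a `name` and a `size` in bytes; `summarize_loaded` and `fetch_loaded` are hypothetical helpers, not kun-lab functions.

```python
import json
import urllib.request
from typing import List, Tuple

OLLAMA_PS_URL = "http://localhost:11434/api/ps"  # Ollama's default local endpoint


def summarize_loaded(ps_response: dict) -> Tuple[List[str], float]:
    """Return the names of loaded models and their combined size in GiB."""
    models = ps_response.get("models", [])
    names = [m.get("name", "?") for m in models]
    total_gib = sum(m.get("size", 0) for m in models) / 1024**3
    return names, total_gib


def fetch_loaded() -> dict:
    """Fetch the list of currently loaded models from Ollama."""
    with urllib.request.urlopen(OLLAMA_PS_URL, timeout=10) as resp:
        return json.loads(resp.read())


if __name__ == "__main__":
    try:
        names, total = summarize_loaded(fetch_loaded())
        print(f"{len(names)} model(s) loaded, {total:.1f} GiB: {', '.join(names) or 'none'}")
    except OSError as exc:  # connection refused, HTTP error, or timeout
        print(f"Could not reach Ollama at {OLLAMA_PS_URL}: {exc}")
```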

Q9: Does kun-lab support multi-user or multi-tenant use?

A: Yes. kun-lab supports multiple users. Each user can create their own account with separate conversation history, preferences, and model configurations. The system keeps a separate data store for each user, so user data stays isolated and secure. In a home or small-team setting, several users can share the same AI model while keeping their own data private.

Q10: What tool-calling features does kun-lab support?

A: kun-lab currently supports several tools that help the AI handle more complex tasks, such as deep web search and file processing. Support for MCP services is planned.
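Tool calling generally works by advertising a function schema to the model and then executing whatever calls the model returns. As a minimal sketch, assuming Ollama's `/api/chat` tool format (a `tools` list of JSON-schema function definitions in the request, and `tool_calls` with dict `arguments` in the assistant message), the dispatch step could look like this. `web_search` is a hypothetical stub, not kun-lab's deep search implementation.

```python
import json
from typing import Any, Callable, Dict, List

# Schema advertised to the model in the "tools" field of a /api/chat request.
WEB_SEARCH_TOOL = {
    "type": "function",
    "function": {
        "name": "web_search",
        "description": "Search the web and return short result snippets.",
        "parameters": {
            "type": "object",
            "properties": {
                "query": {"type": "string", "description": "Search query"},
                "max_results": {"type": "integer", "description": "Number of results"},
            },
            "required": ["query"],
        },
    },
}


def web_search(query: str, max_results: int = 5) -> List[str]:
    # Hypothetical stub: a real tool would call a search API here.
    return [f"result {i + 1} for {query!r}" for i in range(max_results)]


# Map tool names the model may call to local Python functions.
TOOLS: Dict[str, Callable[..., Any]] = {"web_search": web_search}


def dispatch_tool_calls(message: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Run each tool call in an assistant message and build 'tool' role replies."""
    replies = []
    for call in message.get("tool_calls", []):
        fn = call["function"]
        result = TOOLS[fn["name"]](**fn.get("arguments", {}))
        replies.append({"role": "tool", "content": json.dumps(result)})
    return replies
```

The replies would be appended to the conversation and sent back to the model so it can compose a final answer from the tool results.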

Join our community

Next up: multi-agent…